Introduction to Large Language Models

Learn about large language models and related technologies, like prompting and QA models, through human-centric explanations

Large Language Models (LLMs) are a subset of Deep Learning that overlaps with Generative AI, and they focus on understanding and generating text. They are powerful models that are pre-trained on large datasets and can then be fine-tuned for specific tasks or domains.

I'll cover tuning and fine-tuning in more detail at the end, but feel free to jump straight to that section if you prefer.

Pre-trained: in this context, this means the models have already been trained on huge datasets for general capabilities like text generation, document summarization, Q&A, and text classification. However, they can be further specialized for specific areas or domains, like trading, retail, finance, or real estate, by retraining them on smaller domain-specific datasets.

Some popular examples of Large Language Models include GPT, LaMDA, and PaLM, each with its own characteristics and architecture. To leverage LLMs effectively, let's talk a bit about prompting.

Prompting

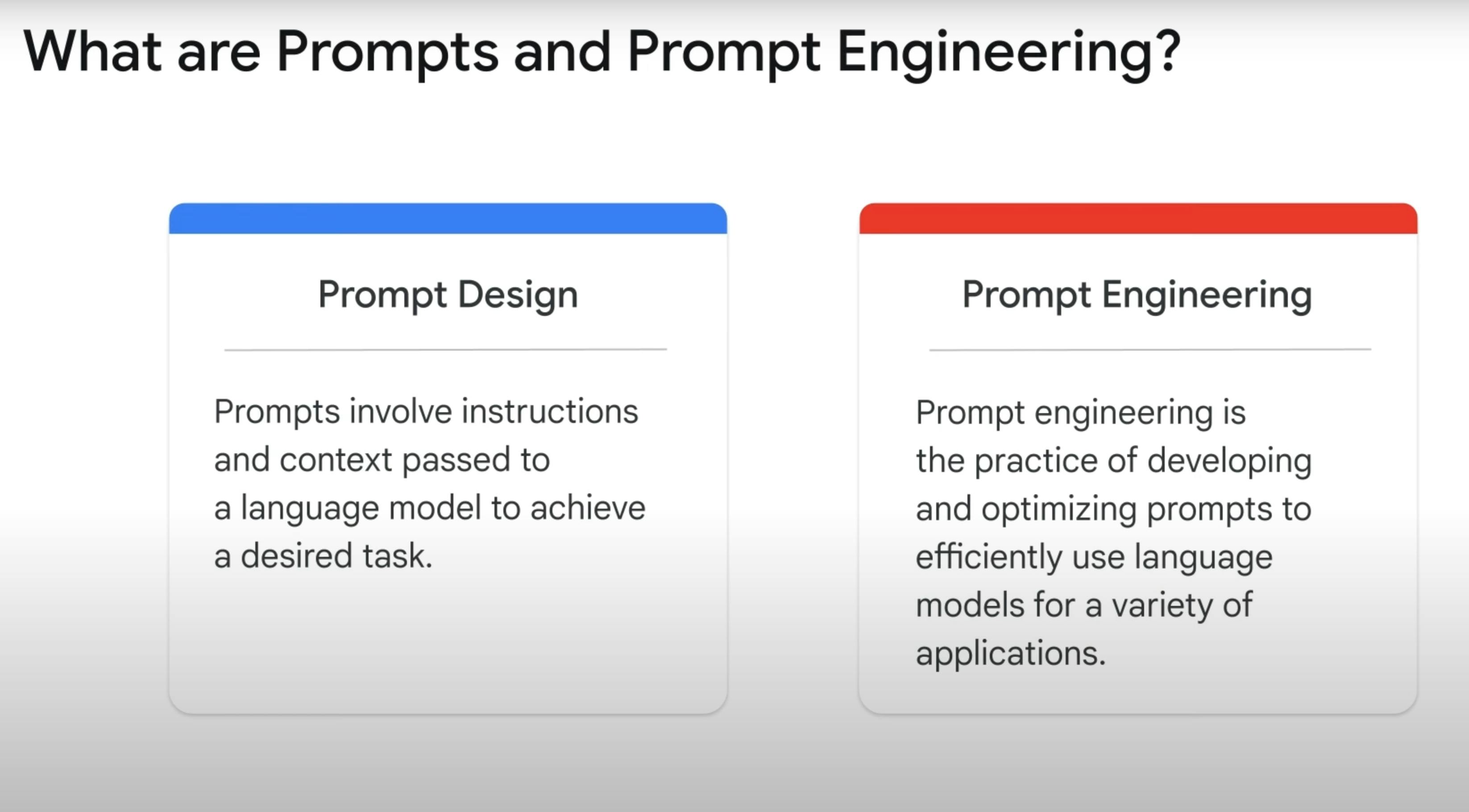

A prompt is like a question or a request you make to a computer program, just as you would to a human being. There are two major areas here that I have seen a lot of people confuse: Prompt Design and Prompt Engineering. They are two closely related concepts in natural language processing, and both involve creating prompts that are clear, concise and informative.

Prompt Design is the process of creating a prompt that is tailored to the specific task the system is being asked to perform. For instance, if the system is asked to translate a text from English to French, the prompt should be written in English and should state that the text is to be translated into French.

A human-centric way of thinking about it is like making a special recipe for a cake. You need to tell the baker exactly what ingredients to use, how much of each to use, and what steps to follow. In prompt design, you're doing something similar. You are giving clear, detailed instructions to the computer so it knows exactly what you want. The more specific and clear you are, the better the computer can understand and do what you ask.
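To make the translation example concrete, here is a minimal Python sketch that assembles such a prompt as a plain string. The function name `build_translation_prompt` and the exact wording are hypothetical; the point is that a well-designed prompt states the task, the source and target languages, and the expected output. Actually sending the string to a model is left out, since that depends on whichever provider's API you use.

```python
def build_translation_prompt(text: str, source_lang: str = "English",
                             target_lang: str = "French") -> str:
    """Build a prompt that spells out the task, the languages and the output format."""
    return (
        f"Translate the following {source_lang} text into {target_lang}.\n"
        f"Return only the {target_lang} translation, with no explanations.\n\n"
        f"{source_lang} text: {text}\n"
        f"{target_lang} translation:"
    )


prompt = build_translation_prompt("Where is the train station?")
print(prompt)
```

Ending the prompt with `French translation:` is a small design choice that nudges the model to reply with just the translation.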

Prompt Engineering, however, is the process of creating a prompt that is designed to improve the performance of the system. This may include providing domain-specific knowledge, providing examples of the desired output, or finding and using keywords that are known to be effective for the specific system.

In the baker and recipe example, this would be like becoming a master recipe writer. You start experimenting with different ways to write your instructions (prompts) to make the baker do more complex or better things. You learn what works best, like finding out that adding a bit of cinnamon makes your cake taste amazing.

You learn how to ask the right questions or give the right instructions to make the computer do amazing things, and how to effectively leverage the capabilities of different models via your prompts.
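One common prompt-engineering technique that matches "providing examples of the desired output" is few-shot prompting: you show the model a handful of worked input/output pairs before the real input. Below is a hypothetical Python helper for a sentiment task; the example reviews, the labels, and the wording are illustrative assumptions, not a recipe from any particular model's documentation.

```python
# Hypothetical labelled examples that show the model the desired output format.
EXAMPLES = [
    ("The delivery was fast and the cake was delicious.", "positive"),
    ("The frosting was stale and the box arrived crushed.", "negative"),
]


def build_few_shot_prompt(review: str, examples=EXAMPLES) -> str:
    """Prepend worked examples (few-shot prompting) before the new input."""
    lines = ["Classify the sentiment of each bakery review as positive or negative.", ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")  # blank line between examples
    # The new review goes last, with its label left for the model to fill in.
    lines.append(f"Review: {review}")
    lines.append("Sentiment:")
    return "\n".join(lines)


print(build_few_shot_prompt("Lovely cinnamon rolls, will order again."))
```

Swapping in different examples, or more of them, is exactly the kind of experimenting described above.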

Question Answering

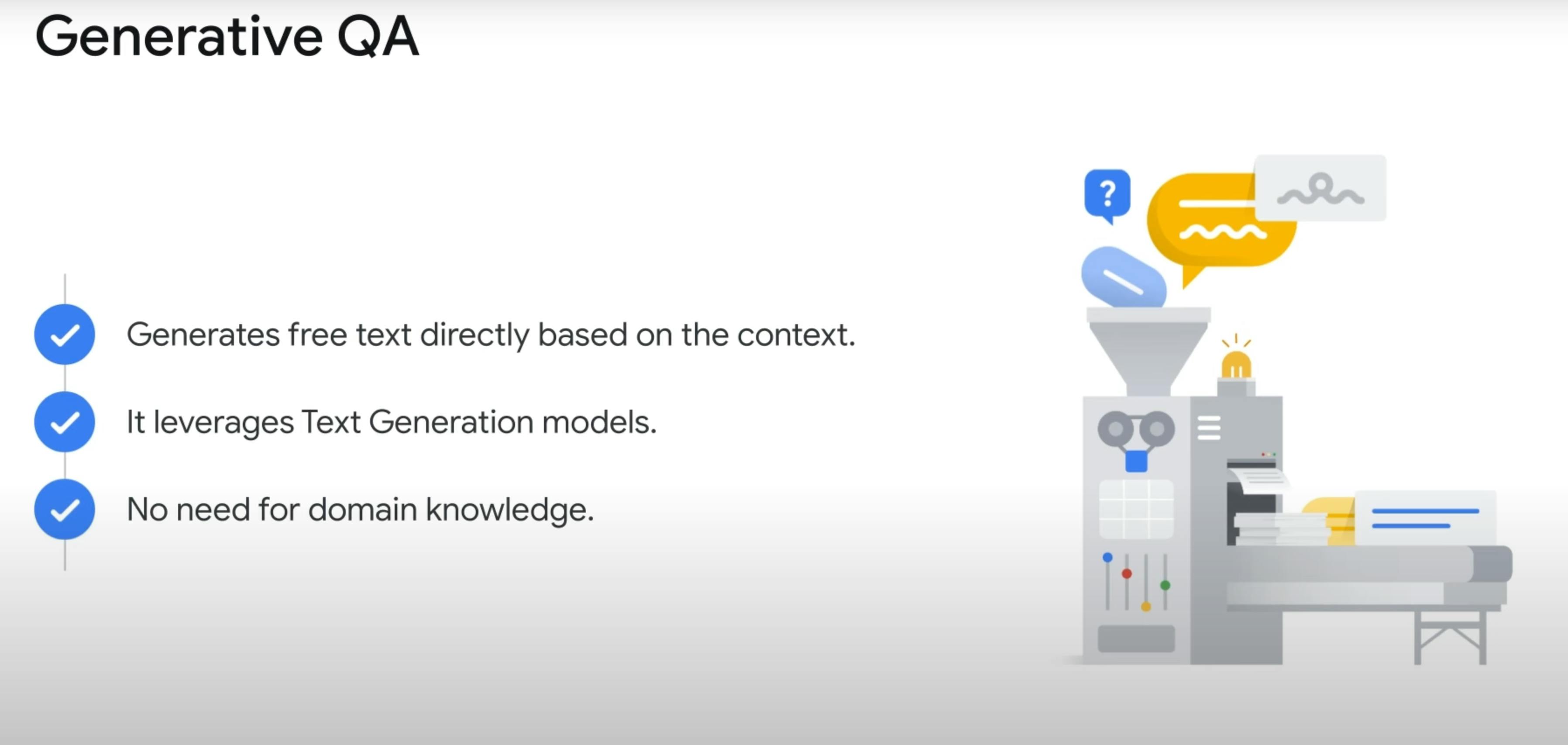

Prompting is a key component of Natural Language Processing (NLP) and of LLMs, because it gives us humans a way to interact with LLMs and get them to do what we want. A good example of how you can take advantage of LLMs is asking them a question; this is called Question Answering. Question Answering is a subfield of Natural Language Processing that deals with automatically answering questions posed in natural language. There are two primary kinds of Question Answering models: Generative and Retrieval-based models. Depending on the model used, the answer is either extracted directly from a document or generated from scratch.

Generative Models

Generative models, like ChatGPT, generate new content based on the input they receive. When you ask a question, these models don't just look for existing answers; they create new responses by understanding and processing the language in your question.
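Models like GPT produce their answers one token at a time, each time predicting a likely next token given what came before. The sketch below is a deliberately tiny stand-in for that idea: a word-level bigram model (a swapped-in toy technique, far simpler than a neural LLM) that counts which word follows which in a made-up corpus and then greedily extends a starting word. The function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict, start: str, max_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    output = [start]
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for this word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


corpus = "the baker bakes the cake and the baker sells the cake"
model = train_bigrams(corpus)
print(generate(model, "the"))
```

A real LLM replaces the word counts with a neural network that conditions on the whole preceding text, which is what lets it compose fluent, new answers.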

Retrieval-based Models

Retrieval-based models work differently. Instead of creating new content, they search through a large database of information (like books, articles, or the internet) to find existing answers. They're like super-efficient librarians who know exactly where to find the information you're looking for.
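A retrieval-based model can be caricatured in a few lines: score every stored document against the question and return the best match. This minimal sketch assumes a simple word-overlap score over a handful of made-up documents; real systems typically use a search index or learned embeddings instead, and the names `DOCUMENTS` and `retrieve` are hypothetical.

```python
import re

# A tiny stand-in for the "large database": hypothetical documents.
DOCUMENTS = [
    "Paris is the capital of France.",
    "PaLM is a large language model developed by Google.",
    "Sourdough bread is leavened with a fermented starter.",
]


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents=DOCUMENTS) -> str:
    """Return the stored document that shares the most words with the question."""
    q_words = tokenize(question)
    return max(documents, key=lambda doc: len(q_words & tokenize(doc)))


print(retrieve("What is the capital of France?"))
```

Note that the answer is always an existing document, never new text, which is the key contrast with the generative approach.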

The best way to leverage both kinds of model is by designing very good prompts that are clear, concise and informative. Now that we understand LLMs a bit better, along with their relationship to prompting and question answering, let's look at tuning and fine-tuning and how they differ.

Tuning and Fine-Tuning

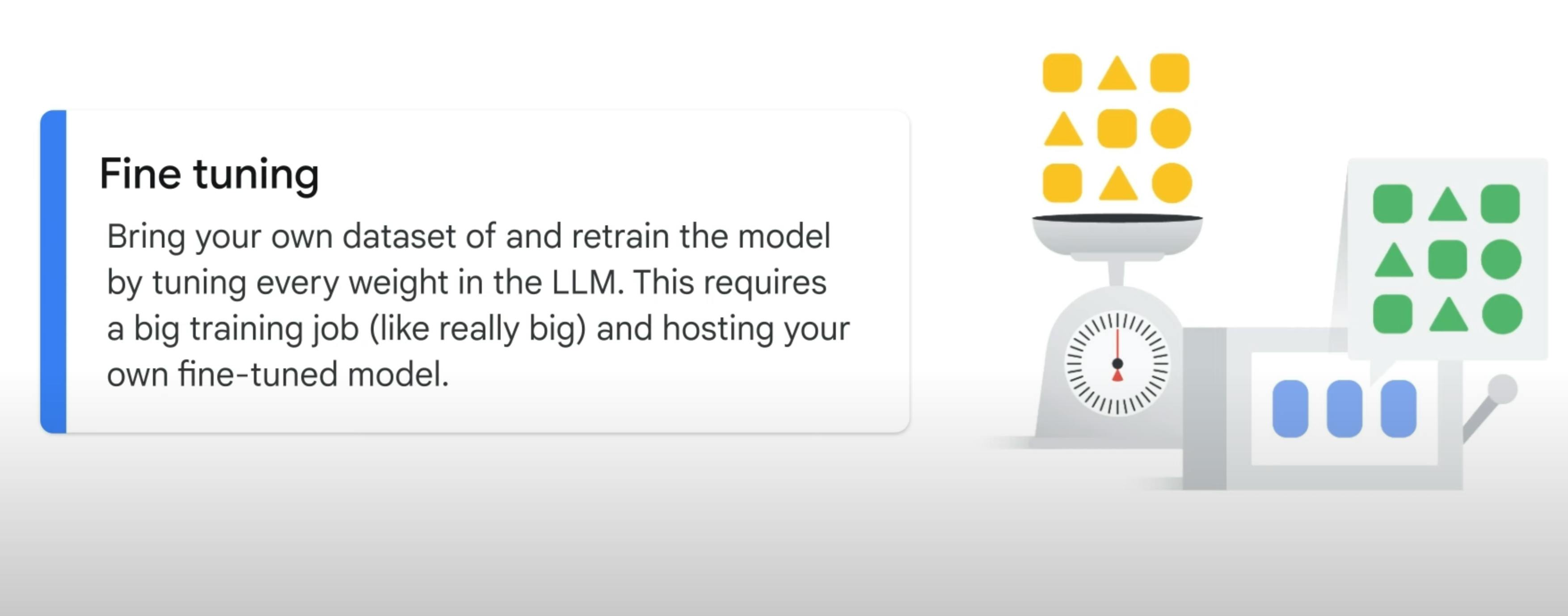

Tuning is the process of adapting a pre-trained model to a specific domain or a set of custom use cases by training it on new data. Fine-tuning, however, involves retraining the model on your own data by updating every weight in the LLM. This requires a big training job plus hosting your own fine-tuned model, and can cost as much as $3 million. So, while fine-tuning is sometimes necessary, it is expensive and unrealistic in many cases, hence the need for more efficient approaches such as Parameter-Efficient Tuning Methods (PETM).

PETM is a family of methods for tuning an LLM on your custom data without duplicating the model. The base model itself is not altered; only a small number of add-on layers are tuned, and these can be swapped in and out at inference time.
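To illustrate the idea, here is a toy Python sketch in the spirit of one popular parameter-efficient technique, low-rank adapters (LoRA). That choice is an assumption for illustration only: the post does not say which method a given platform uses, and the class, method, and task names are hypothetical. The base weights stay frozen, each task gets its own small pair of matrices, and switching tasks just means choosing a different adapter at inference time.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


class AdaptedLayer:
    """A frozen base layer plus small, swappable adapters (LoRA-style toy)."""

    def __init__(self, base_weights):
        self.base_weights = base_weights  # frozen: tuning never modifies these
        self.adapters = {}                # task name -> (down, up) matrices
        self.active = None                # which adapter to apply, if any

    def add_adapter(self, name, down, up):
        # down is r x d and up is d x r, with rank r much smaller than d.
        self.adapters[name] = (down, up)

    def forward(self, x):
        y = matvec(self.base_weights, x)
        if self.active is not None:
            down, up = self.adapters[self.active]
            delta = matvec(up, matvec(down, x))  # the adapter's small correction
            y = [a + b for a, b in zip(y, delta)]
        return y


def trainable_params(d, r):
    """Weights updated for one d x d layer: full fine-tuning vs. a rank-r adapter."""
    return {"full_fine_tuning": d * d, "adapter": 2 * d * r}


layer = AdaptedLayer([[1.0, 0.0], [0.0, 1.0]])  # frozen 2 x 2 base weights
layer.add_adapter("finance", down=[[1.0, 1.0]], up=[[0.5], [0.0]])
print(layer.forward([2.0, 3.0]))   # base model only
layer.active = "finance"
print(layer.forward([2.0, 3.0]))   # base model + finance adapter
print(trainable_params(4096, 8))
```

With a hypothetical layer width of 4096 and rank 8, the adapter trains 65,536 weights instead of 16,777,216, about 0.4% of the layer, which is why many adapters can be stored and swapped cheaply.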

Platforms like Generative AI Studio leverage this method to let you quickly explore and customize generative AI models on Google Cloud. They provide a variety of tools and resources that make it easy for developers to create and deploy generative AI models. One example is the Vertex AI Search and Conversation tool, which lets developers create generative AI apps with little to no coding and no ML experience. With it, you can build and deploy LLM-powered applications ranging from chatbots to custom search engines.

Why LLMs are a big deal

There are a lot of technologies and interesting concepts in the AI landscape, but LLMs seem to be on the tip of everyone's tongue, and there's a reason for it. They are multi-purpose and form the right foundation for everything else to build upon:

A single model can be used for different tasks (text generation, document summarization, Q&A, text classification)

Fine-tuning an LLM for a specific domain requires minimal field (domain-specific) data

Performance continues to improve as more data and parameters are added

Conclusion

This post is really a summary of my notes from taking Google's intro to LLMs course, and my attempt to lower the barrier to entry into AI by explaining these concepts in a way everyone can understand. There is a lot more to LLMs (different kinds of LLMs, Transformer models, etc.) that I haven't covered here, and you should research further if you're interested in the topic.